How to model humans digitally

Intro

You've definitely seen the recent high-quality 3D humans in animations and games. But have you ever considered how those were actually implemented? If you've never looked into this topic, you'd probably think an artist sits down and designs each human from scratch in a 3D modeling tool. If you thought that, you'd be somewhat correct. But designing a human from scratch every single time would be a nightmare, don't you think?

Turns out we can leverage math (shocker, I know) to design a general human body model. Even though there are countless human body types, the overall distribution of human body shapes isn't actually that crazy and can be captured with some neat statistical techniques. I'll cover the essential non-deep-learning approaches in this post and dive into cutting-edge research in later ones.

The Human Body

Even though we won't be looking at deep learning approaches, creating a statistical representation of the human body still requires high-quality 3D body scans. That's where one of the main blockers in this area comes from: you need to collect scans of real people, with their permission, using expensive 3D scanning rigs. As a result, building anything non-commercial or research-oriented gets expensive quickly. One example of such a dataset comes from Render People.

There are many other considerations when collecting a dataset like this. Compared to 2D datasets, 3D datasets tend to be much larger, and it is harder to get good coverage of the target distribution unless you impose some limitations. Luckily, in our case, the limitation is that we only focus on humans. Even then, there's the problem of poses, skin color, hair, and age. We'll ignore hair and clothing for this post, since both are open research topics in their own right. Let's say we have the dataset ready. What's next?

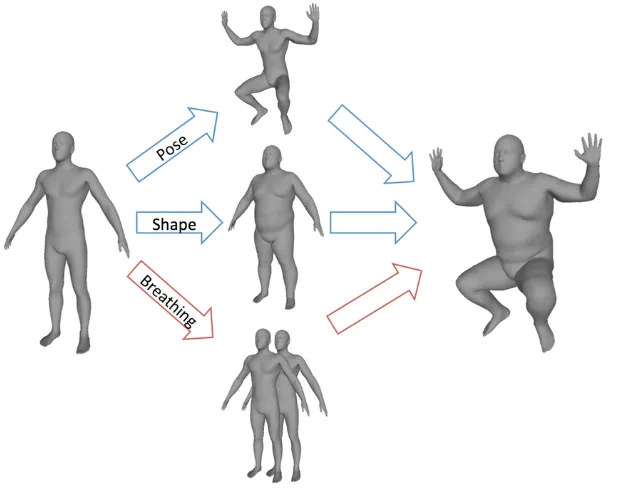

Parametric Models

Drumroll please... We'll use linear algebra and statistics. To keep things simple, let's focus on the shape of the body first. Assuming we have a dataset of human bodies, we can calculate the average shape of that dataset and then encode each body as a deviation from the average. Using this encoding, we can move away from the average body and change its shape, much like moving around in a latent space. If we can encode shape, pose, and potentially an action in this way, we can add those on top of the average body and get a final model with the pose, shape, and action we want.

In essence, a parametric human model lets us edit a whole human mesh, with its many vertices, using only a limited number of parameters. If you've dealt with machine learning and/or statistics, then you might already be guessing what we'll be using. We can leverage Principal Component Analysis, or PCA for short, to generate these control parameters. Here's a link if you'd like to brush up on your PCA knowledge.

So how would we create a PCA basis for the shape? First, you would take your dataset and fit every scan to the same topologically consistent template, so that each mesh has the same number of vertices in the same order. You can think of this as a normalization step, similar in spirit to subtracting the mean before running PCA: it makes the data points directly comparable. We can then treat each body as one really large vector of vertex coordinates. Using these vectors, we can calculate the average vector, the principal components, and the standard deviations. In terms of code, reconstructing a body from those would look something like this:

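```python
# Start from the mean shape and add each principal component, scaled by its coefficient
vertices = mean_shape + sum(coeff[i] * component[i] for i in range(n_components))
vertices = vertices.reshape(n_vertices, 3)  # Convert the flat vector to 3D coordinates
```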
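The reconstruction above assumes the mean shape and the components already exist. Here is a minimal sketch of how they could be computed with NumPy, assuming the scans are already registered to a common template. The function names (`build_shape_basis`, `synthesize`), the random toy data, and the choice to express coefficients in units of standard deviations are my own assumptions for illustration, not something taken from a specific model.

```python
import numpy as np

def build_shape_basis(scans, n_components):
    """scans: (n_scans, n_vertices, 3) array of meshes sharing one topology."""
    n_scans = scans.shape[0]
    flat = scans.reshape(n_scans, -1)        # one long coordinate vector per body
    mean_shape = flat.mean(axis=0)
    centered = flat - mean_shape
    # SVD of the centered data: the rows of vt are the principal directions
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    std_devs = singular_values[:n_components] / np.sqrt(n_scans - 1)
    return mean_shape, components, std_devs

def synthesize(mean_shape, components, std_devs, coeff):
    """coeff is given in units of standard deviations (an assumption of this sketch)."""
    flat = mean_shape + (coeff * std_devs) @ components
    return flat.reshape(-1, 3)               # back to (n_vertices, 3)

# Toy stand-in for real scans: 20 "bodies" with 50 vertices each
rng = np.random.default_rng(0)
toy_scans = rng.normal(size=(20, 50, 3))

mean_shape, components, std_devs = build_shape_basis(toy_scans, n_components=5)
average_body = synthesize(mean_shape, components, std_devs, np.zeros(5))
varied_body = synthesize(mean_shape, components, std_devs,
                         np.array([2.0, 0.0, 0.0, 0.0, 0.0]))
```

Setting every coefficient to zero gives back the average body, and pushing the first coefficient to 2 moves two standard deviations along the direction of largest variation in the dataset.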

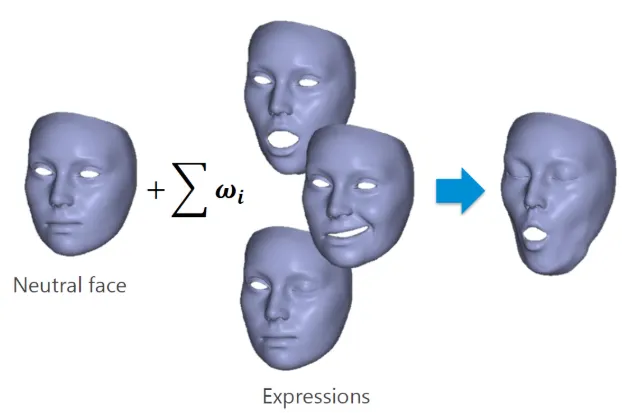

Let's take a look at parametric face models, since they have nicer visuals and tools we can play around with. All the ideas can be transferred from face models to body models with some additional detail, so we're covering all the bases. After collecting a shape dataset and, in the case of faces, an expression dataset, and creating the PCA parameters for those, we can create a wide variety of faces. Of course, there are downsides to PCA-based approaches. Two of the most obvious ones: each parameter has global influence, so changing one coefficient moves vertices across the whole mesh, and the components carry no semantic meaning, so there is no single "nose width" or "arm length" parameter. This means we're unable to have fine control over the characteristics of the face or body.
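To make the global influence point concrete, here is a small sketch that changes a single PCA coefficient and counts how many vertices move. The data is random toy data, not real scans, and all names are made up for the example. Real shape bases are smoother than this, but they are still dense in the same way.

```python
import numpy as np

rng = np.random.default_rng(1)
n_scans, n_vertices = 30, 100

# Toy "registered scans", already flattened to one vector per body
scans = rng.normal(size=(n_scans, n_vertices * 3))

mean_shape = scans.mean(axis=0)
_, _, vt = np.linalg.svd(scans - mean_shape, full_matrices=False)
first_component = vt[0]                      # direction of largest variation

base = mean_shape.reshape(n_vertices, 3)
edited = (mean_shape + 3.0 * first_component).reshape(n_vertices, 3)

# Per-vertex displacement caused by changing just one coefficient
displacement = np.linalg.norm(edited - base, axis=1)
moved = int(np.sum(displacement > 1e-6))
print(f"{moved} of {n_vertices} vertices moved")
```

Because every principal component is a vector over all vertex coordinates, there is no way to edit, say, only the nose with one coefficient.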

Blendshapes

The identity part of both face and body models generally uses PCA. For expressions, though, the "blendshapes" approach has lately become more popular. It is an additive modeling approach: you combine expressions by adding them together with weights, which gives you more control. Keep in mind that this approach requires us to also collect expression data, in addition to the regular 3D scans in a neutral expression. The facial deformations are broken down into additive components, where each blendshape represents a specific type of movement (jaw open, lip pucker, blinking, etc.).
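As a rough illustration of the additive idea, here is a minimal delta-blendshape sketch. The four-vertex "face", the blendshape names, and the offsets are invented for the example. In a real model the offsets come from captured or sculpted expression data.

```python
import numpy as np

def blend(neutral, deltas, weights):
    """Add weighted per-vertex offsets on top of the neutral mesh.

    neutral: (n_vertices, 3) array
    deltas:  dict of name -> (n_vertices, 3) offsets from the neutral mesh
    weights: dict of name -> float, typically between 0 and 1
    """
    result = neutral.copy()
    for name, weight in weights.items():
        result += weight * deltas[name]
    return result

# Toy face with 4 vertices, all at the origin in the neutral expression
neutral = np.zeros((4, 3))
deltas = {
    # Lower vertices move down when the jaw opens
    "jaw_open": np.array([[0, 0, 0], [0, 0, 0], [0, -1, 0], [0, -1, 0]], dtype=float),
    # Middle vertices move forward when the lips pucker
    "lip_pucker": np.array([[0, 0, 0], [0, 0, 0.5], [0, 0, 0.5], [0, 0, 0]], dtype=float),
}

face = blend(neutral, deltas, {"jaw_open": 0.5, "lip_pucker": 1.0})
```

Unlike a PCA component, each blendshape here only touches the vertices involved in its movement, and each weight has a name that an animator can reason about.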

An actively used model for faces is FLAME (Faces Learned with an Articulated Model and Expressions). They also have a web tool where you can play around with the faces that the model can generate. Here's the link.

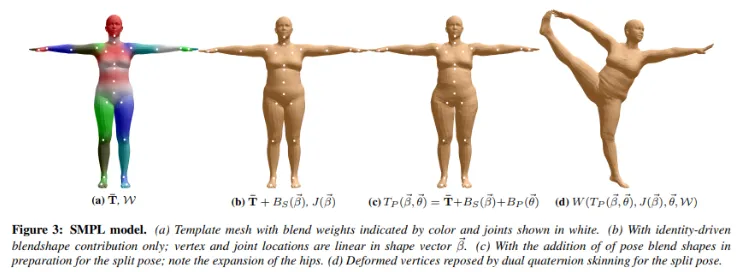

And for bodies, SMPL (A Skinned Multi-Person Linear Model) and its later iterations are used. SMPL combines PCA for shape control with pose-dependent corrective blendshapes for realistic deformations. One of the figures in the SMPL paper lays out these components clearly.
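To give a feel for how these pieces fit together, here is a heavily simplified, SMPL-style forward pass on a toy "bar" mesh with two joints. This is a sketch of the general recipe (shape offsets, joints regressed from the shaped mesh, pose correctives, then linear blend skinning), not the official SMPL code. Every name, array, and value below is hypothetical, and the pose correctives are set to zero because in the real model they are learned from data.

```python
import numpy as np

def rodrigues(axis_angle):
    """Axis-angle vector (3,) -> rotation matrix (3, 3)."""
    angle = np.linalg.norm(axis_angle)
    if angle < 1e-8:
        return np.eye(3)
    k = axis_angle / angle
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * (K @ K)

def toy_body_model(template, shape_dirs, pose_dirs, joint_regressor,
                   skin_weights, parents, betas, pose):
    # 1. Shape: add PCA-style shape offsets to the template
    shaped = template + shape_dirs @ betas                 # (V, 3)
    # 2. Joints are regressed from the shaped mesh
    joints = joint_regressor @ shaped                      # (J, 3)
    # 3. Pose correctives: linear in (R - I) of the non-root joints
    rotations = np.stack([rodrigues(p) for p in pose])     # (J, 3, 3)
    pose_feature = (rotations[1:] - np.eye(3)).ravel()
    rest = shaped + pose_dirs @ pose_feature               # (V, 3)
    # 4. Forward kinematics along the joint hierarchy
    n_joints = len(parents)
    world = np.zeros((n_joints, 4, 4))
    for j in range(n_joints):
        local = np.eye(4)
        local[:3, :3] = rotations[j]
        if parents[j] < 0:
            local[:3, 3] = joints[j]
            world[j] = local
        else:
            local[:3, 3] = joints[j] - joints[parents[j]]
            world[j] = world[parents[j]] @ local
    # Make each transform act on rest-pose vertex positions
    for j in range(n_joints):
        world[j, :3, 3] -= world[j, :3, :3] @ joints[j]
    # 5. Linear blend skinning: blend joint transforms per vertex
    blended = np.einsum("vj,jab->vab", skin_weights, world)
    homogeneous = np.concatenate([rest, np.ones((len(rest), 1))], axis=1)
    return np.einsum("vab,vb->va", blended, homogeneous)[:, :3]

# Toy data: 4 vertices along the y axis, 2 joints (root and one child)
template = np.array([[0, 0, 0], [0, 1, 0], [0, 2, 0], [0, 3, 0]], dtype=float)
shape_dirs = 0.1 * template[:, :, None]       # one shape component: lengthen the bar
pose_dirs = np.zeros((4, 3, 9))               # learned in a real model, zero here
joint_regressor = np.array([[1, 0, 0, 0], [0, 0.5, 0.5, 0]], dtype=float)
skin_weights = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
parents = [-1, 0]

rest_pose = np.zeros((2, 3))
bent_pose = np.array([[0, 0, 0], [0, 0, np.pi / 2]])      # bend child joint 90 degrees

neutral = toy_body_model(template, shape_dirs, pose_dirs, joint_regressor,
                         skin_weights, parents, np.zeros(1), rest_pose)
bent = toy_body_model(template, shape_dirs, pose_dirs, joint_regressor,
                      skin_weights, parents, np.zeros(1), bent_pose)
longer = toy_body_model(template, shape_dirs, pose_dirs, joint_regressor,
                        skin_weights, parents, np.array([1.0]), rest_pose)
```

With zero shape and pose parameters the function returns the template unchanged. Bending the child joint only moves the vertices skinned to it, and changing the shape coefficient also moves the joints, since they are regressed from the shaped mesh.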

Conclusion

In this short blog post, we only scratched the surface of digital human models. To dive deeper into more advanced topics, it's necessary to understand the base models that are widely used. One of the biggest benefits of models like SMPL and FLAME is that, since they're parametric, their parameters can be used in optimization tasks to fit a certain person, pose, or expression. This opens the door to a wide variety of deep learning techniques, like fitting a face model to a picture of someone, facial animation transfer, or whole-body pose transfer. Since I'm starting to actively work in this area once again, I'll be revisiting lots of topics and writing about them as I find time. Feel free to suggest any direction; I am open to suggestions! Feedback is always welcome as well!

Next Up

- Applications of deep learning that leverage face and body models:

- Hair-related approaches

- Clothing-related approaches

- Facial animation transfer, body pose transfer

- Neural face and body models

- GANs, NeRFs, and Gaussian Splatting-based approaches

- Whatever my attention span catches